This could prove very useful, but I'll need to do some testing to see how it works.

Just add the following line to your openssl.cnf file, in the [ v3_ca ] section:

subjectAltName = DNS:www.test.com,DNS:*.kensystem.com,DNS:*.etc.com
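DNS entries in subjectAltName are host names (optionally with a leading wildcard label), not URLs, so a scheme such as http:// does not belong in them. As a rough sketch, the value could be assembled and sanity-checked like this. The helper name `build_subject_alt_name` and the simple host-name pattern are my own assumptions for illustration, not part of OpenSSL.

```python
import re

# Hypothetical helper, not part of OpenSSL: builds the value for a
# subjectAltName line from a list of host names.
# The pattern is deliberately simple: optional "*." prefix, then dotted labels.
_HOST_RE = re.compile(r"^(\*\.)?([A-Za-z0-9-]+\.)+[A-Za-z0-9-]+$")


def build_subject_alt_name(hosts):
    """Return a 'DNS:a,DNS:b' string, rejecting anything that is not a bare host name."""
    entries = []
    for host in hosts:
        if not _HOST_RE.match(host):
            raise ValueError(f"not a bare host name: {host!r}")
        entries.append(f"DNS:{host}")
    return ",".join(entries)


if __name__ == "__main__":
    hosts = ["www.test.com", "*.kensystem.com", "*.etc.com"]
    print("subjectAltName = " + build_subject_alt_name(hosts))
```

The printed line can then be pasted into openssl.cnf by hand.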

Apache2 Lounge: How-to More than one domain with your SSL

EclecticGeek

Monday, May 28, 2007

SSL: One Certificate, Multiple Domains

I was out trying to refresh my memory on how to set up SSL on Apache for Windows when I ran across a useful tip for allowing a single certificate to validate against multiple domains.

Apache+SSL on Windows

I wanted to get SSL working on my server to enable a bit of security for things like checking mail remotely. Plus, there's always the possibility of me doing other things in the future with it.

Unfortunately, setting up SSL in Apache isn't nearly as easy as it is in IIS. Microsoft really does do a good job (eventually) with their GUI tools. The steps aren't really all that onerous, but a full step-by-step is difficult to find. I won't go into all the details, but this is enough to get going for me.

The first thing we need to do is, obviously, install Apache. I won't go into the details of configuring the server, that can easily take up a series of separate posts. For me, I found the Apache Lounge (AL) install after I had already set up using the MSI from the Apache Software Foundation (ASF). The nice thing about the AL install is that it will just drop in on top of the ASF one (be sure to back up your configuration files) and you get the benefit of the service already having been installed and the monitor set to run. Not that it's all that difficult to do, but it's nice to have it done for you.

Next, install OpenSSL. The process is fairly straightforward.

Now the tricky part, enable SSL in Apache. There's a lot of voodoo here related to PKI and encryption schemes, etc. There are a few tutorials out there (see the references), but I'll summarize here for brevity.

- Optional: set up your private key through OpenSSL (you don't have to do this, as a private key can be generated for you in the next step):

openssl genpkey -algorithm RSA -out key.pem

- Generate your certificate signing request (CSR) that you will provide to your certificate authority (such as CAcert):

openssl req -config openssl.cnf -new -out my-server.csr

If you've already created your private key you have to specify it:

openssl req -key keyfile.pem -config openssl.cnf -new -out my-server.csr

- At some point you have to remove the passphrase from the private key:

openssl rsa -in privkey.pem -out my-server.key

If you don't do this you'll have to type in the passphrase every time you start Apache ... which isn't an option if you're running Apache as a service. This does present a problem in that your private key can now be used by anyone who can get access to it. So be sure to keep your private key secured on your file system, preferably somewhere only you (as an administrator) and your server user can access it.

- Submit the CSR to your CA and then drop the certificate they give you into your certificate/key store.

- Configure Apache (this may vary slightly depending on the version of Apache, I'm using 2.2.x) (trimmed to the stuff relevant to SSL, don't forget any other important parameters such as the document root):

# Load SSL Module and instantiate

LoadModule ssl_module modules/mod_ssl.so

<ifmodule ssl_module>

SSLRandomSeed startup builtin

SSLRandomSeed connect builtin

</ifmodule>

# Set up SSL

SSLCertificateKeyFile "server.key"

Listen 443

AddType application/x-x509-ca-cert .crt

AddType application/x-pkcs7-crl .crl

SSLPassPhraseDialog builtin

SSLSessionCache "shmcb:ssl_scache(512000)"

SSLSessionCacheTimeout 300

SSLMutex default

#Virtual Host Setup

<virtualhost *:443>

ServerName www.eclecticgeek.com

SSLEngine on

SSLCertificateFile site.crt

SSLCipherSuite ALL:!ADH:!EXPORT56:RC4+RSA:+HIGH:+MEDIUM:+LOW:+SSLv2:+EXP:+eNULL

<filesmatch "\.(cgi|shtml|phtml|php)$">

SSLOptions +StdEnvVars

</filesmatch>

BrowserMatch ".*MSIE.*" \

nokeepalive ssl-unclean-shutdown \

downgrade-1.0 force-response-1.0

RewriteEngine on

RewriteCond %{HTTPS} off

RewriteRule ^/(.*) https://%{HTTP_HOST}:443/$1 [L,R]

</virtualhost>

Performing a minor version upgrade is pretty simple:

- Install the updated OpenSSL package.

- Since we're using the Apache Lounge build of Apache2 with OpenSSL compiled in we need to update Apache as well.

- Download the zipped package

- Extract into a new directory

- Stop the Apache service

- Rename the previous install's directory

- Rename the new install's directory to match that used previously

- Copy the cert and conf directories from the previous install to the new one

- Start the service.

- Should be done with the upgrade, test to make sure.
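The directory shuffle in the steps above can be sketched in Python. This is only an illustration under assumptions: the function name `swap_install` and the backup path are made up here, the directory names `conf` and `cert` are taken from the steps above, and stopping and starting the Apache service is left to you.

```python
import shutil
from pathlib import Path


def swap_install(current: Path, new: Path, backup: Path, keep=("conf", "cert")):
    """Move `current` aside to `backup`, move `new` into its place,
    then copy the directories named in `keep` from the old install."""
    if backup.exists():
        raise FileExistsError(f"backup directory already exists: {backup}")
    current.rename(backup)   # rename the previous install's directory
    new.rename(current)      # the new install takes over the old name
    for name in keep:
        src = backup / name
        if src.is_dir():
            dst = current / name
            if dst.exists():
                shutil.rmtree(dst)   # drop the stock directory shipped with the new build
            shutil.copytree(src, dst)
```

Because the old install is only renamed, rolling back is a matter of stopping the service and swapping the directory names again.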

Also note: this is a work in progress. Unfortunately I didn't write this stuff down when I initially set up SSL so I'm going based off my spotty memory and information I could find online.

References:

- Apache Lounge (source of updated binary of Apache + OpenSSL, and good forums for Apache on Windows admins)

- OpenSSL

- Shining Light Productions: Win32 OpenSSL

- jmSolutions: mod_ssl and OpenSSL for Apache using Win32 (setup tutorial)

Sunday, November 12, 2006

More Anti-Spam Techniques

The current version of hMail has greylisting as a built-in option. I've enabled that and easily seen a 90% drop in spam. Greylisting does entail a delay in e-mail delivery, but it's usually so minor that the delay has no real effect (most mail servers try again within a few minutes).
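For anyone unfamiliar with the idea, greylisting boils down to temporarily rejecting the first delivery attempt from an unknown sender and accepting a later retry. The sketch below shows the general technique only; it is not hMail's implementation, and the class name, the 300-second delay, and the return values are assumptions chosen for illustration.

```python
import time


class Greylist:
    """Toy greylist keyed on (client IP, sender, recipient)."""

    def __init__(self, min_delay=300, clock=time.time):
        self.min_delay = min_delay   # seconds a new triplet must wait (assumed value)
        self.clock = clock           # injectable clock, handy for testing
        self.first_seen = {}         # triplet -> time of first attempt

    def check(self, client_ip, sender, recipient):
        """Return 'accept' or 'tempfail' for one delivery attempt."""
        key = (client_ip, sender.lower(), recipient.lower())
        now = self.clock()
        first = self.first_seen.setdefault(key, now)
        if now - first >= self.min_delay:
            return "accept"      # the sender retried after the delay
        return "tempfail"        # ask the sending server to try again later
```

A legitimate mail server queues the message and retries, so it gets through on a later attempt; a lot of spam software never retries.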

On top of greylisting I still have my e-mail validation script. I no longer redirect based on the results of this script, but I still add a results header. The header is now used as a weighting factor by SpamAssassin (SA).

Yes, I've finally implemented the big gun. One of the reasons it's taken so long is that setting up SA isn't a quick install since it is originally written for *nix. And while the installation process isn't overly difficult, the tuning aspect of using SA is most difficult for the uninitiated.

Luckily there is plenty of good documentation out there and I was able to get things up and running over the course of a few days. So, here's a quick run-down on how to get SA running on Windows 2000:

- Download SA for Windows (version 3.1.7.0 as of this writing). I grab the SpamAssassin command line tools, sa-learn, and sa-update.

- Install (I chose to place it next to Apache in "Program Files\Apache Software Foundation").

- Configure SA (using local.cf). Really you can keep the defaults if you want, but I made a few modifications since I didn't want subject modification (only headers), needed to add a test to check sender validation based on the results of my custom script, wanted to specify the Bayesian filter data store path, and needed to specify the internal/trusted network information.

- Set up spamd to run as a service. Use whatever software you like, I've been using XYNTService by Xiangyang Liu available from The Code Project. It's simple and works well enough in this situation.

- Set up hMail to run SA. I do this via event handler scripting. During the OnDeliveryStart event subprocedure I run a batch file that scans the incoming message and writes the results to disc. The results are then copied back over the original, which hMail then uses for further processing.

- Now set up sa-update so that you can ensure your SA rules are up-to-date. I do this through a Windows Scheduled Task.
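The scan-and-copy-back step from the hMail item above can be sketched as follows. I use a batch file for this, so the Python below is only an illustration of the same flow. `scan_in_place` is a made-up name, and `scanner_cmd` stands in for whatever command reads a message on stdin and writes the processed message to stdout (spamc works that way); the 60-second timeout is an assumption.

```python
import os
import subprocess
import tempfile


def scan_in_place(message_path, scanner_cmd, timeout=60):
    """Pipe the message through scanner_cmd and replace the file only on success.

    Raises subprocess.TimeoutExpired if the scanner runs longer than `timeout`.
    """
    with open(message_path, "rb") as src:
        original = src.read()
    result = subprocess.run(scanner_cmd, input=original,
                            stdout=subprocess.PIPE, timeout=timeout)
    if result.returncode != 0 or not result.stdout:
        return False   # leave the original message untouched
    # Write the result next to the original so os.replace stays on one volume.
    folder = os.path.dirname(os.path.abspath(message_path))
    fd, tmp_path = tempfile.mkstemp(dir=folder)
    with os.fdopen(fd, "wb") as tmp:
        tmp.write(result.stdout)
    os.replace(tmp_path, message_path)   # copy the results over the original
    return True
```

Keeping the original when the scanner fails means a broken scanner delays filtering rather than losing mail.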

A note regarding internal/trusted IPs. I have only one public IP, so all the devices on my network use an IP from one of the private ranges. Unfortunately this can have an ill effect on SA when there's only one Received header (e.g. the e-mail came directly from the originating server). SA assumes that the first public IP must be from a trusted MX. The result is that pretty much every directly-connecting, originating mail server triggers the ALL_TRUSTED rule. This significantly decreases the spam caught by SA. Specifying your trusted/internal IPs in the configuration file fixes the error. For the nitty gritty, see the following:

- SpamAssassin Wiki: TrustPath

- SpamAssassin Wiki: TrustedRelays

- Apache Mailing List: All_TRUSTED (not)
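To make the trust-path idea a bit more concrete, here is a simplified model in Python. It is not SpamAssassin's actual algorithm, just an illustration of why listing your own networks matters (in local.cf that is done with the `trusted_networks` and `internal_networks` settings). The function name and the example addresses are assumptions.

```python
import ipaddress


def first_untrusted_relay(relay_ips, trusted_networks):
    """Return the first relay IP that is outside the trusted networks.

    relay_ips: IPs taken from the Received headers, most recent hop first.
    trusted_networks: CIDR strings for the networks you control.
    """
    nets = [ipaddress.ip_network(n) for n in trusted_networks]
    for ip in relay_ips:
        addr = ipaddress.ip_address(ip)
        if not any(addr in net for net in nets):
            return ip
    return None   # every hop was trusted, the situation ALL_TRUSTED describes


if __name__ == "__main__":
    # A message handed straight to the server by an outside host:
    print(first_untrusted_relay(["203.0.113.7"], ["192.168.0.0/16"]))
```

With the private range declared as trusted, the directly-connecting outside host is identified as untrusted instead of being waved through.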

I was thinking it might be a good idea to rewrite my sender verification script so that it runs as a native SA test. That might be more work than I'm willing to put in, though, considering some of the options I'd have to take into account such as the perl modules the script relies on and the disk-based cache.

It would be nice to provide an easy way to parse messages through the Bayesian filter. I'm not sure what would work best, but I was thinking of adding a header that contains a URL that will parse a message as spam if it was previously considered not spam and vice versa. This is something I'll need to research.

Tuesday, August 22, 2006

PC Hardware: Dell Precision 220

I recently changed servers from a custom-built PC I had to my old Dell Precision 220. Mostly I made the choice because the processor in the custom PC is a tad bit faster than the 220's. It's running an Athlon 1GHz (of the Thunderbird variety) whereas the 220 is running a Pentium III. True, the 220 can accommodate two P3s ... but not many of the apps I use take advantage of that fact. The Dell is also a little bit better engineered so it works a little better as a server.

I purchased a number of upgrades to turn the 220 into a worthy server. In no particular order:

- A drive cage from a newer Dell model (off eBay), capable of holding four drives versus the 220's measly two

- Two P3s running at 1GHz (off eBay)

- A new Kingston 512MB RDRAM module from memorysuppliers.com (the 220 doesn't need the modules to be paired)

- A new Dell-compatible power supply from PC Power & Cooling, the Silencer 360 Dell

- An APC Back-UPS RS 800VA

- A Promise FastTrak TX4200

- Two SATA II 320GB Seagate 7200.10 drives (model # ST3320620AS).

Most of the other components I bought new because I didn't want used parts. This machine will be pretty well abused with all the services running on it: Beyond TV, music, web, mail, etc.

A note on the SATA controller/hard-drives I bought. I was specifically looking for parts capable of a specific SATA feature, Native Command Queuing (NCQ). From what little I've read this feature doesn't provide much use on a desktop (at least not yet). But since this machine is being used more as a server I think it'll be important.

Most of the upgrades were painless, but I did run into some minor problems with the power supply:

First, Dell uses a custom pin configuration on the motherboard's power connector. During installation I noted that the PCPower part had a different pin-out from the Dell part. After some conferring with the PCPower techs, however, I was assured that the Silencer would work without issue ... and it did.

Second, while installing the power supply I realized that Dell customized their part further (beyond the pin-out customization) by modding the shell. Two notches are cut in the top of the power supply that help hold it in place, along with a lone screw. I didn't want to risk any problems with the Silencer falling out of place so I got around the issue by using two zip ties.

Third, some time after installing the Silencer I noticed that my APC was switching to battery mode enough to drain the battery. That was a big problem. At first I thought the unit was having problems due to continuous high voltage (hovering around 128 quite often). But after looking through the APC knowledge base I found a few articles that talked about Total Harmonic Distortion (THD) and how it can cause the UPS to switch to battery mode. Switching the sensitivity of the UPS to "medium" seems to have addressed that problem.

Windows Weirdness: SB Live!

"Holy crap, you're still using a Live!?" Yes. Yes I am. This is my work pc so it doesn't need the latest and greatest. Hell, even my play pc isn't that up to date anymore (though it's good enough for Final Fantasy VII).

Anyway ... this post isn't (necessarily) about antiquated technology. Ever since getting this pc repurposed as a work machine I've had a problem with the sound stuttering. I let it go for a while, but decided I couldn't take it any more and so decided to try to do something about it.

My first clue that something was amiss was in looking at the XP device manager. When viewing by resource type just about all the PCI devices on my system were assigned IRQ 9. Weird. Not totally unheard of thanks to IRQ sharing in modern PCs ... but weird nonetheless. It gave me an idea for what could be wrong so I did a few searches on Google.

Suffice it to say there seems to be plenty of blame to go around. Nobody can agree on who's at fault, but it looks to me like a confluence of factors causing issues.

- VIA's Apollo KT133 chipset is implicated in a few places for the IRQ madness. Perhaps true, but my Asus A7V seems more than capable otherwise so I'm willing to give VIA some slack on this one.

- Creative's SB Live! has gotten its share of dress-downs. No doubt the drivers are a little suspect for XP since the card is fairly old. And I don't doubt it's a bit of a resource hog (on the PCI bus that is) since it's the only component that was having problems. I saw at least one post noting that the card has a poor ACPI interface header. I don't know much about that last one, so I won't comment on that particular ... um ... comment.

- Windows XP once again shows its immaturity. "Wha?" you say. I feel Microsoft can take some (or a lot) of the blame for this one. ACPI is supposed to alleviate problems with resource conflicts, but somehow it's just made them worse in this instance. Why doesn't the system see a preponderance of resource usage piling up on a single IRQ and address the problem? I know it probably has to do partially with the ACPI standard, but Microsoft had a hand in defining and implementing that standard. I think they could have done better.

I would like to point out two resources, however. The first one I'm pointing out not because it's necessarily the best one out there. I'm pointing it out because it got me where I needed to go by leading me to the second reference once I started down the path of this work-around.

So first we have Irq weirdness on usenet. Since the problem appears to be related to overuse of a single IRQ resource the obvious work-around would be to modify the IRQ used by the various devices. Unfortunately, if your computer type is specified as ACPI (see the "Computer" group in Device Manager) you're out of luck. Since the goal of ACPI is to automatically manage resource allocation to avoid conflicts, manual allocation is disabled.

To regain this functionality you have to modify the computer type to be "Standard PC" ... which I did by "updating" the driver for the ACPI device to the "Standard PC" driver. Making this change can have its own pitfalls (not the least of which is resource conflicts). Luckily my motherboard is more than capable of managing resource usage so once I rebooted everything worked fine. I still had a shared IRQ between the SB Live! and the built-in ATA66 controller. I still couldn't change the settings from within Windows so I rebooted, entered the BIOS, and specified the IRQ I wanted to use for the PCI slot containing the sound card.

Doing this caused Windows to lose track of ... well ... everything. All system drivers (and I do mean all) had to be rediscovered. This meant redoing the display settings, redefining my wireless connections, etc. Luckily I have yet to run into any major problems otherwise.

The one issue I did encounter that was going to be extremely annoying was that my computer would no longer shut down by itself. I was getting the classic "It is now safe to turn off your computer" message. I found the answer to this one, and my second reference, from Microsoft. See the aptly named KB "It is Now Safe to Turn Off Your Computer" error message when you try to shut down your computer. The procedure basically has to do with disabling the ACPI driver and installing the driver for APM support.

After all is said and done I now seem to be stutter free.

Monday, August 14, 2006

hMail: Resource Allocation Limitations

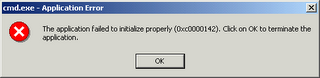

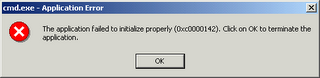

I've been running into some serious problems with my external message processing scripts. It appears that over time the server builds up processes that haven't exited. After a while so many processes build up that the server is unable to run any more and a pop-up error message is generated for each additional attempt.

I was able to find some information (see references) that indicates that this is basically a desktop memory heap overflow. The server needs a discrete amount of memory for each object that is created on the desktop, in this case each command line window. But there is a limit to the number of objects that can be allocated (a limit on the total memory used by the desktop heap).

"Every desktop object on the system has a desktop heap associated with it. The desktop object uses the heap to store menus, hooks, strings, and windows. The system allocates desktop heap from a system-wide 48-MB buffer. In addition to desktop heaps, printer, and font drivers also use this buffer." (1)

This is a problem for services as well. But to compound the problem, any service which runs under the LocalSystem account with "Allow service to interact with the desktop" checked uses up the active Windows session. Services without this checked share memory in a default non-interactive session. Services run under a specific account have their own desktop heap allocation.

In essence, as processes are run by any particular "user" the memory allocation for that user is used up. If the processes are not cleaned up appropriately (e.g. terminated when complete) the allocation for that process is not released.

I've been able to mitigate the frequency of the problem by assigning the hMail service to a particular user. This is something I had intended on doing anyway, but I'll need to go back and check to make sure that the security and auditing settings are valid once all users have been created.

I had one thought as to the cause of the process build-up: could it be due to the remote server disconnecting when hMail takes too long to process the message? Basically the timeout being reached and the remote server disconnecting? I think this is a possibility because I had to set up my custom scripts to run while the SMTP session is still active. I'll have to run some tests to try and figure out if this is what's happening.

Whether or not this is the case, I'm thinking of switching to the Exec() method of the WScript object. That way I can terminate the object if it runs for too long. This will, however, require more care in setting up the scripts because I won't be able to just wait for the program to exit ... I'll have to actively monitor it to see when execution is complete. The Run() method waits for the program before continuing script execution while the Exec() method executes the program then continues on with the script.

References:

Searched google for "windows 2000 SharedSection"

- PRB: User32.dll or Kernel32.dll fails to initialize

- "Out of Memory" error message appears when you have a large number of programs running

- Unexpected behavior occurs when you run many processes on a computer that is running SQL Server

Update 2006-08-20:

A quick test of the server indicates that the problem is not due to a premature disconnect. I would like to know why these processes aren't ending, but I think it's more important in the meantime to make sure they're killed if they take too long.
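The "kill it if it takes too long" approach looks roughly like this. My scripts run under Windows Script Host, so the Python below is only an analogue of the Exec()-and-monitor pattern (start the program, poll it, terminate it past a deadline), not the code I actually run; the function name, timeout, and poll interval are assumptions.

```python
import subprocess
import time


def run_with_timeout(cmd, timeout, poll_interval=0.1):
    """Start cmd, poll until it exits, and kill it once `timeout` seconds pass.

    Returns the exit code, or None if the process had to be killed.
    """
    proc = subprocess.Popen(cmd)              # returns immediately, like Exec()
    deadline = time.monotonic() + timeout
    while proc.poll() is None:                # still running
        if time.monotonic() >= deadline:
            proc.kill()
            proc.wait()                       # reap the process so nothing lingers
            return None
        time.sleep(poll_interval)
    return proc.returncode
```

The caller can treat a None result as "scan failed" and pass the message along unprocessed rather than letting stuck processes pile up.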

Monday, August 07, 2006

hMail: Anti-Spam/Anti-Virus Techniques

Switching to hMail

When I switched to hMail I had to make some changes to the way I check for bad mail on the server (right now sender address validity and viruses). One of the biggest problems with the built-in functionality of hMail, I find, is that the only option it uses when a positive result is returned (e.g. bad e-mail address or virus found) is that the message is dropped. There is no option using the built-in functionality to add a header or redirect to a new mailbox. This is not acceptable to me as I like to be able to confirm how well my bad-mail filters are working.

The other problem is that hMail doesn't have built-in support for running external programs (beyond an external virus scanner; see above for why I don't use this). It does, however, include an event script that uses Microsoft scripting (VBScript, JScript, and maybe other WSH processors though I haven't confirmed that) to allow for flexibility in mail handling. The script can be used to run external programs (via the

Modifications to my scripts

Using the event script I am able to call external scripts by creating an instance of the

CheckAddr.pl was modified to add a new argument option of "hMail" which is pretty much the same as the Mercury argument except that instead of two additional arguments (the mail file and the result file) only one additional argument is needed (the mail file). Once the script is done running I use the return code to determine the value of a the

Virus checking by F-Prot is still accomplished in the same way as before, calling a batch file that executes F-Prot. Once the batch file has exited the program then checks for a log file based on the name of the message file. The presence or absence of this log file indicates whether or not a virus was found and determines the value of the

I use the server rules to redirect any message marked as bad to the spam dump account.

Problem with the event model

I have one issue with the event model used in the event script ... the only viable event on which to process messages is

I have to use this particular event handler because the server rules are run after a message is accepted (

I'd like to see a new event added to future version of the server that occurs after the SMTP session but before any further processing by the server.

When I switched to hMail I had to make some changes to the way I check for bad mail on the server (right now, sender address validity and viruses). One of the biggest problems with the built-in functionality of hMail, I find, is that the only thing it does when a positive result is returned (e.g. bad e-mail address or virus found) is drop the message. There is no option using the built-in functionality to add a header or redirect to a new mailbox. This is not acceptable to me, as I like to be able to confirm how well my bad-mail filters are working.

The other problem is that hMail doesn't have built-in support for running external programs (beyond an external virus scanner; see above for why I don't use this). It does, however, include an event script that uses Microsoft scripting (VBScript, JScript, and maybe other WSH processors, though I haven't confirmed that) to allow for flexibility in mail handling. The script can be used to run external programs (via the WScript.Shell object) and perform general message handling (using the built-in hMail object model). With this interface I can use custom scripts to run additional processing tasks on any message that arrives at my server.

Modifications to my scripts

Using the event script I am able to call external scripts by creating an instance of the WScript.Shell object and using the Run() method to execute a command. I only had to make minimal changes to my scripts to enable them to be run in this way.

CheckAddr.pl was modified to add a new argument option of "hMail", which is pretty much the same as the Mercury argument except that instead of two additional arguments (the mail file and the result file) only one additional argument is needed (the mail file). Once the script is done running I use the return code to determine the value of the X-Eclectic-AddrCheck header. This header is added to the message using the HeaderValue() method of the hMail message object.

Virus checking by F-Prot is still accomplished in the same way as before, by calling a batch file that executes F-Prot. Once the batch file has exited, the event script checks for a log file based on the name of the message file. The presence or absence of this log file indicates whether or not a virus was found and determines the value of the X-Eclectic-VirScan message header. If the log file does exist, a virus was found; the file is parsed for the line indicating what virus was detected, which is then prepended to the subject of the message. The log file is then deleted.

I use the server rules to redirect any message marked as bad to the spam dump account.
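The flow described above can be sketched roughly as follows. This is Python rather than the VBScript event script and Perl checker mentioned in the post, and the header values ("pass", "fail", "clean", "infected"), the log-file naming, the "Infection:" log line and all function names are assumptions made for illustration, not the actual scripts.

```python
import re
import subprocess
import sys
from pathlib import Path


def run_checker(command, mail_file):
    """Run an external checker with the mail file as its last argument
    and return the process exit code."""
    completed = subprocess.run([*command, str(mail_file)])
    return completed.returncode


def address_check_header(return_code):
    """Map the checker's exit code to a header value.
    Assumption: 0 means the sender address looked valid."""
    return "pass" if return_code == 0 else "fail"


def log_path_for(mail_file):
    """Hypothetical naming rule: same name as the message file, .log suffix."""
    return Path(mail_file).with_suffix(".log")


def virus_scan_result(log_path):
    """Return (header_value, virus_name). The log file existing at all
    means a virus was found; its absence means the message is clean."""
    log_path = Path(log_path)
    if not log_path.exists():
        return "clean", None
    virus_name = None
    for line in log_path.read_text(errors="replace").splitlines():
        match = re.search(r"Infection:\s*(.+)", line)  # assumed log format
        if match:
            virus_name = match.group(1).strip()
            break
    log_path.unlink()  # the log is deleted once it has been parsed
    return "infected", virus_name


def tag_subject(subject, virus_name):
    """Prepend the detected virus name to the subject, if there is one."""
    return f"[VIRUS: {virus_name}] {subject}" if virus_name else subject
```

The two results would then be written to the X-Eclectic-AddrCheck and X-Eclectic-VirScan headers, leaving the redirect decision to the server rules.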

Problem with the event model

I have one issue with the event model used in the event script: the only viable event on which to process messages is OnAcceptMessage, which runs after the message has been handed to the server but before the SMTP server disconnects. Any significant delay in processing the message (especially with my custom scripts called from the event script) could cause the SMTP session to time out.

I have to use this particular event handler because the server rules are run after a message is accepted (OnAcceptMessage) but before message delivery (OnDeliverMessage). Since I'm adding a header to the message based on the results of my custom scripts and then using server rules to determine whether or not to redirect those messages to my spam dump, I don't have any other choice at the moment. I'd much rather be able to run my custom scripts after the SMTP session has completely ended.

I'd like to see a new event added to a future version of the server that occurs after the SMTP session but before any further processing by the server.
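Until such an event exists, one possible way to limit the timeout risk is to put a deadline on each external check and treat an overrun as "not checked". The sketch below is Python, not the actual event script; the function name and the choice to return None on overrun are assumptions.

```python
import subprocess
import sys


def run_with_deadline(command, deadline_seconds):
    """Run an external command and return its exit code, or None if it
    did not finish within deadline_seconds (the child is killed)."""
    try:
        completed = subprocess.run(command, timeout=deadline_seconds)
    except subprocess.TimeoutExpired:
        # The caller can mark the message as unchecked instead of
        # holding the SMTP session open any longer.
        return None
    return completed.returncode
```

A sensible deadline would be well below the sending server's SMTP timeout, which varies from server to server.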

Sunday, August 06, 2006

Mail Server Setup

For a while I was using Mercury/32 as my mail server. It's a great little program and very robust. But (based on what I've read on the support list) depending on the specific setup it seems it can be a little flaky with regard to stability. I've started to run into problems myself. Beyond that, there are a few things about the program that I'm not too happy with. The biggest of those is the fact that M32 doesn't have an option to run as a service. Sure, it can be addressed via various run-as-a-service programs ... but it's not quite the same and generally makes management a little more difficult.

So I recently switched to a new mail server, hMail Server. What brought it to my attention was its database capabilities. I was actually looking for a program that stored the actual messages in a database. Sure, it would be a lot of overhead, but there would be so much more flexibility for access and archiving ... depending on how the database was set up. hMail isn't quite what I was looking for, but its integration with Windows made it pretty good. No need to get into the details; you can get that from the site. So I'll just leave it at the fact that I've been very happy so far. Plus, I've been able to implement just about all the functionality I was using in M32 ... making it a good drop-in replacement (well ... almost, since I'm using IMAP).

M32 is supposed to be undergoing an overhaul right now. It'll be interesting to see how it progresses. I'll have to give it another look once the next major revision is released. If nothing else the native support for running as a service will be a most welcome change.

Argh! MTU strikes again!

Every time I set up a PC I run into networking problems. Invariably they're related to the default MTU in Windows. Usually I never notice until I need to access Microsoft.com, whose site uses a lower packet size than the Windows default (thanks MS!).

The whole thing is just very annoying and shouldn't be such a pain. For some reason my laptop has no problems, but every desktop I've set up at home does.

My setup is a Windows 2000/XP multi-PC network on a Linksys DSL router/switch. I've gone with an MTU of 1454 to maximize compatibility. But it sure does suck that I'm not getting the most efficient throughput. Does anyone know how to deal with this without having to modify the registry of each PC I set up?
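For a sense of what an MTU of 1454 costs compared with the Ethernet default of 1500, the arithmetic can be sketched as below. It assumes plain IPv4 and TCP headers without options (20 bytes each) and an 8-byte ICMP echo header; the function names are made up for this example.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
TCP_HEADER_BYTES = 20   # TCP header without options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def tcp_mss(mtu):
    """Largest TCP payload that fits in one packet of the given MTU."""
    return mtu - IPV4_HEADER_BYTES - TCP_HEADER_BYTES


def ping_payload(mtu):
    """Largest ping payload that fits in one unfragmented packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def payload_share(mtu):
    """Fraction of each full-size TCP packet that is actual payload."""
    return tcp_mss(mtu) / mtu


for mtu in (1454, 1500):
    print(mtu, tcp_mss(mtu), ping_payload(mtu), round(payload_share(mtu), 4))
```

On those assumptions the payload share per full packet differs by less than a tenth of a percentage point between the two settings. The ping payload figure is the size to try with the Windows `ping -f -l <size>` don't-fragment test when checking whether a given MTU gets through.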

What's it about?

I've been doing a lot of nothing in this blog. Partly because I haven't really spent much time thinking about what I want to do with it. Partly because I've significantly pared down the work I do at home.

I've been trying to ramp up my productivity at home, though, and I think this blog can help. I've been using my other blog to document what I've been doing at work. Specifically any problems I've encountered and solutions I've found. I think doing the same for this blog will be a good start.

So starting today I hope to start spending more time filling out the content here. Wish me luck.

Tuesday, March 22, 2005

more perl fun

Two fixes in two days for my mail reader. This is some kind of record!

The attachment downloader was having some problems when the filename included odd characters. Realized that a) I was not URL-encoding the filename in the attachment link and b) I was unencoding the filename in the script that sends the file (which is completely unnecessary).

Fixed and fixed.
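The two halves of that fix can be illustrated with a small sketch. It is in Python rather than the Perl the mail reader is written in, and `download.cgi`, the `file` parameter and the function names are hypothetical. It assumes the download link carries the filename in the query string and that the receiving side's query parser already decodes it once.

```python
from urllib.parse import parse_qs, quote, unquote, urlsplit


def attachment_link(base_url, filename):
    """Build a download link with the filename percent-encoded."""
    return f"{base_url}?file={quote(filename, safe='')}"


def filename_from_link(link):
    """Recover the filename; parse_qs does the one and only decode."""
    return parse_qs(urlsplit(link).query)["file"][0]


link = attachment_link("download.cgi", "notes%20draft #2.txt")
name = filename_from_link(link)

print(link)           # odd characters are escaped in the link
print(name)           # decoded exactly once: the original filename
print(unquote(name))  # decoded a second time: no longer the original
```

Decoding a second time is harmless for most names, but it changes any filename that contains a literal percent sequence, which is one more reason to leave it out.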
