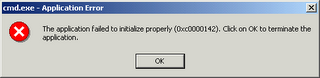

I was able to find some information (see references) that indicates that this is basically a desktop heap overflow: the desktop heap is running out of memory. The server needs a discrete amount of memory for each object that is created on the desktop, in this case each command line window. But there is a limit on the total memory used by the desktop heap, and therefore on the number of objects that can be allocated.

Every desktop object on the system has a desktop heap associated with it. The desktop object uses the heap to store menus, hooks, strings, and windows. The system allocates desktop heap from a system-wide 48-MB buffer. In addition to desktop heaps, printer and font drivers also use this buffer. (1)

This is a problem for services as well. To compound the problem, any service that runs under the LocalSystem account with "Allow service to interact with the desktop" checked uses the desktop heap of the active Windows session. Services without this checked share memory in a default non-interactive session. Services that run under a specific account have their own desktop heap allocation.
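The per-desktop heap sizes discussed above are controlled by the SharedSection setting, which is the term the references below were found with. As a rough sketch, assuming the three-value form `SharedSection=shared,interactive,non-interactive` (sizes in KB), the setting can be pulled out of the subsystem startup string like this. The sample string is only an illustration, not a value read from my server, and the helper name `parse_shared_section` is made up for this example.

```python
import re


def parse_shared_section(windows_value):
    """Return the three SharedSection sizes (in KB) found in the string.

    Assumes the three-value form: shared heap, interactive desktop heap,
    non-interactive desktop heap.
    """
    match = re.search(r"SharedSection=(\d+),(\d+),(\d+)", windows_value)
    if match is None:
        raise ValueError("no three-value SharedSection setting found")
    shared, interactive, non_interactive = (int(v) for v in match.groups())
    return {
        "shared_kb": shared,
        "interactive_kb": interactive,
        "non_interactive_kb": non_interactive,
    }


# Illustrative sample only (an assumption, not taken from a real machine).
sample = (r"%SystemRoot%\system32\csrss.exe ObjectDirectory=\Windows "
          r"SharedSection=1024,3072,512 Windows=On")
sizes = parse_shared_section(sample)
print(sizes)
```

With values like these, a service in the non-interactive session would get a much smaller heap than the interactive desktop, which fits the behaviour described above.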

In essence, as processes are run by any particular "user", the memory allocation for that user is used up. If the processes are not cleaned up appropriately (e.g. terminated when complete), the allocation for those processes is not released.

I've been able to reduce the frequency of the problem by assigning the hMail service to a particular user. This is something I had intended to do anyway, but I'll need to go back and check that the security and auditing settings are valid once all users have been created.

I had one thought as to the cause of the process build-up: could it be due to the remote server disconnecting when hMail takes too long to process the message? Basically the timeout being reached and the remote server disconnecting? I think this is a possibility because I had to set up my custom scripts to run while the SMTP session is still active. I'll have to run some tests to try and figure out if this is what's happening.

Whether or not this is the case, I'm thinking of switching to the Exec() method of the WshShell (WScript.Shell) object. That way I can terminate the process if it runs for too long. This will, however, require more care in setting up the scripts because I won't be able to just wait for the program to exit; I'll have to actively monitor it to see when execution is complete. The Run() method can wait for the program to exit before continuing script execution (when it is told to wait on return), while the Exec() method starts the program and then continues on with the script.

References:

Searched Google for "windows 2000 SharedSection":

- PRB: User32.dll or Kernel32.dll fails to initialize

- "Out of Memory" error message appears when you have a large number of programs running

- Unexpected behavior occurs when you run many processes on a computer that is running SQL Server

Update 2006-08-20:

A quick test of the server indicates that the problem is not due to a premature disconnect. I would like to know why these processes aren't ending, but I think it's more important in the meantime to make sure they're killed if they take too long.
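To make the "kill it if it takes too long" idea concrete, here is a minimal sketch of the polling pattern. It is written in Python as a stand-in, not in Windows Script Host code, and it is not what currently runs on the server. In a WSH script the corresponding pieces would be the object returned by Exec(), its Status property, and its Terminate() method. The function name `run_with_timeout` and the timeout values are assumptions made for this example.

```python
import subprocess
import sys
import time


def run_with_timeout(command, timeout_seconds, poll_interval=0.1):
    """Start command, poll until it exits, and kill it if it overruns.

    Returns a tuple (exit_code, timed_out).
    """
    process = subprocess.Popen(command)
    deadline = time.monotonic() + timeout_seconds
    while process.poll() is None:        # None means it is still running
        if time.monotonic() >= deadline:
            process.kill()               # forcibly end the overdue process
            process.wait()               # reap it so nothing is left behind
            return process.returncode, True
        time.sleep(poll_interval)
    return process.returncode, False


if __name__ == "__main__":
    # Stand-in commands: one exits at once, one would run for 30 seconds.
    quick = [sys.executable, "-c", "pass"]
    slow = [sys.executable, "-c", "import time; time.sleep(30)"]
    print(run_with_timeout(quick, timeout_seconds=5))
    print(run_with_timeout(slow, timeout_seconds=1))
```

The trade-off is the one noted earlier: the script has to keep checking on the process instead of simply blocking until it exits, but in return a stuck process cannot sit around holding its desktop heap allocation indefinitely.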
